“Sometimes it’s better to look integrated than be integrated.” It’s a saying that goes back a few years now, and I can’t even remember who coined the phrase first. I do think about the concept from time to time, though. It seems most appropriate in meetings such as the one I had last week, where a senior IT executive asked me to comment on a 15-page data flow diagram showing how a legacy timekeeping system would be integrated with the system we had recently supplied.

Fields representing data in both systems were mapped, and stored procedures were referred to but not defined. The understanding of how data was to move back and forth between the systems was sketchy at best. Perhaps most unclear of all was what benefits the organization expected to realize from this extensive amount of work.

This is not an isolated case. In our work implementing project control environments, we’ve had plenty of opportunity to look at extensive ERP implementations where months of effort are allocated to “interfacing,” which typically means full integration of data between multiple, usually incompatible, systems.

What is it that makes this kind of exercise so compelling? Well, it makes for great marketing hype. If you’re in the business of selling man-hours for implementing enterprise systems, then this scenario is made in heaven for you. Pretty PowerPoint slides showing lines of data moving between all these systems, and suggesting that management will be able to get completely integrated data, are enthralling. Not long ago I watched a demonstration in which a financial report on screen, with a click of a button, dissolved into a pie chart. From there it became a histogram, and a click on the histogram broke the graph into a spreadsheet showing multiple suppliers. One more click turned this list into another graph, and from there it drilled down to an individual invoice. To say that this demonstration got the attention of the potential client would be an understatement. These executives were virtually salivating, imagining an ultimate level of control over their own organization’s data.

The truth is not always so simple. In practice, data must often be approved and batched together before analysis. There usually needs to be a defined flow of data from one place to the next, and, as is almost always the case, this data will come from multiple systems which may or may not (probably not) have been designed to integrate their data.

As I’ve often said in these pages, the most effective way to analyze such decisions is to measure the Return on Investment. A delightful demonstration is not reason enough to start integrating systems, work that must be done at an architectural level.

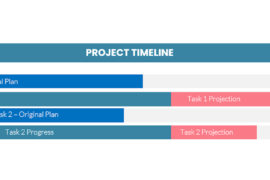

In the 15-page data flow diagram I recently had to confront, data moved from one system to the next, other elements moved back to the first, and still more elements moved back to the second. It was difficult even to follow the logic. This might have been worthwhile if there had been some measurable benefit, but if there were big gains in efficiency to be found, I certainly couldn’t recognize them. The legacy system was, of course, still there, but the initial impetus for integrating the two systems seems to have been to produce a single report showing total hours across departments.

Even if such a report had been required, it would have taken the tiniest fraction of the effort to import the data from the legacy system into the new system in order to produce the report. “Ahh, but that would require intervention every single month,” I was told. “Good point,” was my reply. “However, the 15 minutes it would take every month is far less than the 4 weeks spent writing the integrated design and code, and the countless hours spent trying to keep the systems in sync!”
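A rough break-even calculation shows how lopsided that comparison is. It rests on assumptions not stated in the conversation: the 4 weeks are taken as one person working 40-hour weeks, and the ongoing effort of keeping the systems in sync is left out entirely, which only flatters the integrated design.

$$
\begin{aligned}
\text{integration effort} &\approx 4\ \text{weeks} \times 40\ \text{h/week} = 160\ \text{h} \\
\text{manual import effort} &= 12\ \text{months/year} \times 0.25\ \text{h/month} = 3\ \text{h/year} \\
\text{break-even} &\approx \frac{160\ \text{h}}{3\ \text{h/year}} \approx 53\ \text{years}
\end{aligned}
$$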

Interfaces have another hidden benefit as well. Having to intervene to run the interface creates a perfect point of control for managing the data flow. If you’ve got a checklist item in your procedures which says “import the timesheet actuals,” then having to physically click an icon or select a menu option, and confirm that it runs, is a great place to make sure that the item is complete and that all data has moved from the source system to the transaction file, and from the transaction file to the receiving system, as required.
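The control point described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not how any particular product works: the transaction file is assumed to be CSV, the record layout is invented, and `Timesheet`, `import_timesheet_actuals`, and `post_to_project_system` are hypothetical names. The idea is simply that record counts and total hours are checked at each hop, source to transaction file and transaction file to receiving system, so the person running the import sees a mismatch immediately.

```python
import csv
import io
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class Timesheet:
    """One timesheet actual (hypothetical layout, for illustration only)."""
    employee_id: str
    project_code: str
    hours: float


def control_totals(rows: List[Timesheet]) -> Tuple[int, float]:
    """Record count and total hours: the figures checked at each hop."""
    return len(rows), round(sum(r.hours for r in rows), 2)


def write_transaction_file(rows: List[Timesheet], out: io.TextIOBase) -> None:
    """Source system -> transaction file (CSV is an assumption here)."""
    writer = csv.writer(out)
    writer.writerow(["employee_id", "project_code", "hours"])
    for r in rows:
        writer.writerow([r.employee_id, r.project_code, f"{r.hours:.2f}"])


def read_transaction_file(inp: io.TextIOBase) -> List[Timesheet]:
    """Parse the transaction file back into records for the receiving system."""
    return [
        Timesheet(row["employee_id"], row["project_code"], float(row["hours"]))
        for row in csv.DictReader(inp)
    ]


def import_timesheet_actuals(
    source_rows: List[Timesheet],
    post: Callable[[List[Timesheet]], List[Timesheet]],
) -> Tuple[int, float]:
    """Checklist item 'import the timesheet actuals' with a control check per hop.

    `post` is a hypothetical stand-in for the receiving system's import
    routine; it returns the rows it actually accepted.
    """
    expected = control_totals(source_rows)

    # Hop 1: source system -> transaction file
    buf = io.StringIO()
    write_transaction_file(source_rows, buf)
    buf.seek(0)
    staged = read_transaction_file(buf)
    if control_totals(staged) != expected:
        raise ValueError(f"transaction file {control_totals(staged)} != source {expected}")

    # Hop 2: transaction file -> receiving system
    accepted = post(staged)
    if control_totals(accepted) != expected:
        raise ValueError(f"receiving system accepted {control_totals(accepted)}, expected {expected}")
    return expected


if __name__ == "__main__":
    known_projects = {"P-100", "P-200"}

    def post_to_project_system(rows: List[Timesheet]) -> List[Timesheet]:
        # Hypothetical receiving system that quietly drops unknown project codes
        return [r for r in rows if r.project_code in known_projects]

    good = [Timesheet("E1", "P-100", 7.5), Timesheet("E2", "P-200", 8.0)]
    print(import_timesheet_actuals(good, post_to_project_system))  # (2, 15.5)

    bad = good + [Timesheet("E3", "P-999", 4.0)]
    try:
        import_timesheet_actuals(bad, post_to_project_system)
    except ValueError as err:
        print("import stopped:", err)
```

The value of the deliberate, manual step is that a mismatch halts the run at a moment when someone is already watching, rather than surfacing months later as two “integrated” systems that quietly disagree.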

Many finance departments have this in their sights already. Many systems promote their “open architecture” database as the holy grail of systems integration. There’s no doubt that an open architecture provides more options than a closed architecture, but the picture painted is often too close for comfort for finance people. Finance systems are, after all, tough to get stable, and once they are stable, there is a tremendous reluctance to touch them, even for upgrades. Every time I’ve suggested that we’d be prepared to integrate the data from a project control system directly into the accounting system, the finance staff have stared at me in horror. No, they say, it would be far preferable to deliver a “transaction file” to finance for importing when they’re darned well good and ready. The idea that an “external” system (for Finance, this mostly means anything outside the GL, AR, AP, PR package) would change cost values in the main accounting system without human intervention is abhorrent to most finance personnel.

While this is true for the systems we’ve been implementing, in many cases it will be equally appropriate for other internal systems to follow the same logic. When an interface can deliver the result at a fraction of the investment of time and effort, then you’ve got to take a careful look at what full integration is actually providing beyond that. Far better to interface systems in an organized fashion than to spend the next 6 months trying to determine why the “integrated” system isn’t.